Application Developers

Sounds like a great idea. We hear it from leaders and managers who genuinely appreciate the value of good data, but this simple statement belies the fact that, in reality, fixing data the right way is just not that easy.

Because many business data users know the value of good data, they quite naturally try to correct bad data whenever and wherever they find it. These are the best of intentions, and managers may in fact encourage business users to do this. It just seems like the right thing to do. In small workgroups this may actually be a good way to improve data quality. However, in specialized workgroups, especially in larger enterprises, it may not be the best idea; in fact, it may cause problems and drive costs up.

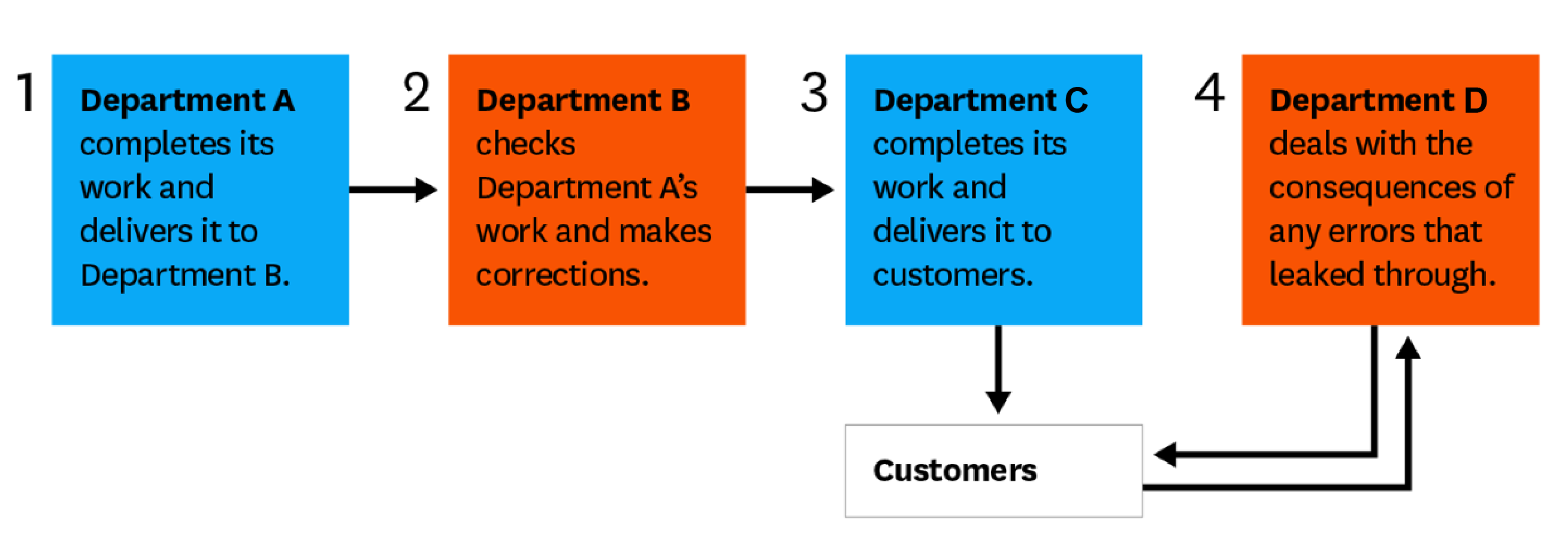

As companies and organizations grow in scale, uncoordinated individual efforts become less and less effective at resolving the problem of bad data. These individual efforts can give rise to an organic process termed a "Hidden Data Factory" (HDF). The process emerges in a workflow where data is passed from one department to another, and each department quietly corrects the errors it receives before passing the data on. The graphic above, a slightly modified illustration based on work by Thomas Redman (HBR.org), illustrates the scenario.

It all seems well and good on the surface, but in reality this gives only temporary relief, and only in one department. The problem is that the data errors are never addressed at their root, i.e., the Authoritative Data Source (ADS)[1]. The fixes may work for one department and for some downstream processes, but all these corrections can quickly be washed away when the next batch of records arrives from the same upstream ADS with the same errors.

A broad Data Governance strategy, developed in collaboration with stakeholders and especially with the business users who experience the pain of bad data firsthand, can be the best defense against HDF!

The first step to eliminating HDF is recognizing the problem. If there is little or no communication between departments, or no mechanism for reporting data issues to a higher authority such as a Chief Data Officer, HDF will continue to operate.

So while recognizing and reporting data quality issues is everyone's responsibility, the ultimate responsibility for implementing the best solution rests with leadership and depends on the existence of a Data Governance Framework. Without a comprehensive strategy and a common understanding of the value of Data Quality, the status quo will continue to prevail. Here is an illustration I created identifying the key elements of a Data Governance Framework.

Source: https://www.alqemy.com/lab/dgcl/

If your organization is struggling with Data Quality, I hope you find this checklist helpful.

Ben Marchbanks